Short answer: NO. Before you start cursing and calling me an idiot, read on for the long version of the answer.

I know we've all been taught all our lives that computers understand machine code, which is represented in binary form (0s and 1s). But what if that was an attempt to oversimplify things? Okay, let's begin with an introduction to number systems.

A number system is a way of representing numbers. Some well-known number systems are:

- Decimal: base 10.

- Hexadecimal: base 16.

- Ternary: base 3.

- Binary: base 2, etc.
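If you want to see these bases side by side, here's a small Python sketch that writes the same quantity, sixty-five, in each of them by repeatedly dividing by the base. The `to_base` helper is my own illustration, not a standard function:

```python
DIGITS = "0123456789ABCDEF"

def to_base(n: int, base: int) -> str:
    """Write a non-negative integer n using the digits of the given base (2 to 16)."""
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)  # peel off the rightmost digit
        digits.append(DIGITS[remainder])
    return "".join(reversed(digits))    # digits were collected right-to-left

for base in (10, 16, 3, 2):
    print(f"base {base:>2}: {to_base(65, base)}")
# base 10: 65
# base 16: 41
# base  3: 2102
# base  2: 1000001
```

Same number every time; only the way it is written changes.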

We already know that decimal is the standard number system for human communication, and we already understand “counting” in it. Let’s skip all the history of how this number system came to be the standard long before other number systems were discovered, including the story of the Ali language of Central Africa (read Georges Ifrah’s ‘The Universal History of Numbers’ for more details on that). The decimal system uses ten digits, 0 through 9. Once you count to 9 and have used up every digit the system provides, that position starts over at 0 and you add 1 to the position on the left. Like: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20,... etc.
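Adding 1 to the left side works because each position is worth ten times the position to its right. Here's a tiny Python sketch that breaks a decimal numeral into its place values (the `place_values` name is just for illustration):

```python
def place_values(number: str) -> list[tuple[int, int]]:
    """Split a decimal numeral into (digit, place value) pairs, leftmost first."""
    width = len(number)
    return [(int(d), 10 ** (width - 1 - i)) for i, d in enumerate(number)]

pairs = place_values("2024")
print(pairs)                          # [(2, 1000), (0, 100), (2, 10), (4, 1)]
print(sum(d * p for d, p in pairs))   # 2024
```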

In the case of binary, the number system contains only 0 and 1, so once these two digits are exhausted in a position, that position starts over at 0 and 1 is added to the position on its left. Like: 0, 1, 10, 11, 100, 101, 110, 111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111, 10000,... etc.
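To make the “start over and add 1 to the left” rule concrete, here's a minimal Python sketch of counting with a carry. The `increment` and `count` helpers are hypothetical names I made up, and `count` only handles bases 2 to 10 because it prints each digit with `str`:

```python
def increment(digits: list[int], base: int) -> list[int]:
    """Add 1 to a number stored as a list of digits (most significant first)."""
    result = digits[:]                # work on a copy
    i = len(result) - 1
    while i >= 0:
        if result[i] + 1 < base:      # this position still has an unused digit
            result[i] += 1
            return result
        result[i] = 0                 # position exhausted: start over and carry left
        i -= 1
    return [1] + result               # carried past the leftmost digit: add a new one

def count(base: int, steps: int) -> list[str]:
    """Return the first `steps` numbers when counting up from 0 in the given base (2 to 10)."""
    digits = [0]
    seen = []
    for _ in range(steps):
        seen.append("".join(str(d) for d in digits))
        digits = increment(digits, base)
    return seen

print(", ".join(count(2, 17)))
# 0, 1, 10, 11, 100, 101, 110, 111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111, 10000
```

Calling `count(10, 21)` with the same helper gives the decimal sequence 0 to 20: the rule is identical, only the number of available digits changes.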

Basically, this is how ‘counting’ happens in binary. But some of you already know this and have wondered how the computer could possibly understand it, since 0 and 1 are digits we humans write, and the computer isn’t exactly a native English speaker.

That’s where microprocessors and transistors come into play in computing.

The microprocessor is an integrated circuit (or chip) in the computer that is responsible for carrying out the computer’s processing. It is made up of tiny switches called transistors. Yes, switches.

Think of them like the switches in your home (although they are built very differently). They have only two states: on or off. By switching its transistors between on and off millions or even billions of times per second, the microprocessor manipulates electricity to carry out commands. After all, the computer is an electronic device. Everything in it exists in the form of electricity: the data, the commands, the power, etc.

Back to binary. The two possible values, 0 and 1, are what we humans use to represent the two possible states of the transistors: 0 for off and 1 for on.

Now let’s briefly look at something more interesting: ASCII.

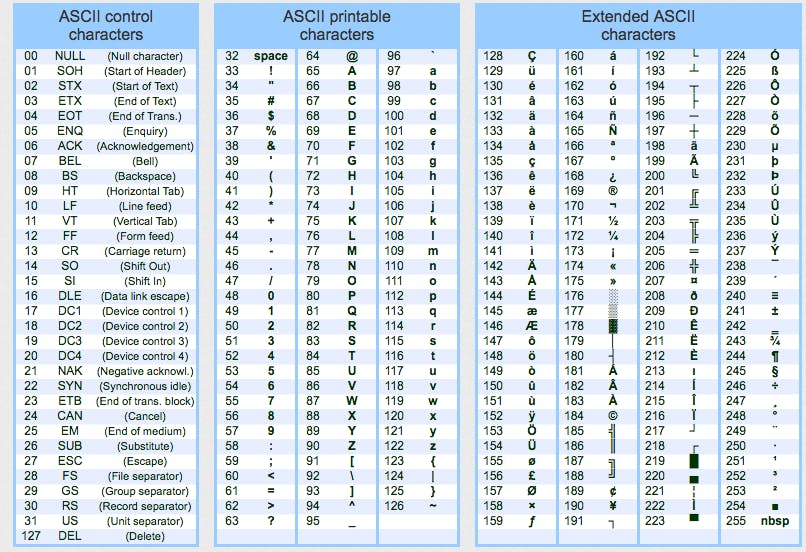

ASCII stands for American Standard Code for Information Interchange. It was first published in 1963 by the X3 committee of the American Standards Association, originally for teletypewriters. It’s a standard that assigns each character a number, and the computer stores that number in binary. So based on the image above...
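You can peek at this mapping from Python. In this small sketch, `ord` returns a character’s code number (technically its Unicode code point, which matches ASCII for the first 128 characters), `chr` goes the other way, and encoding a string as ASCII gives the byte values that would actually be stored:

```python
text = "Hi!"
codes = [ord(ch) for ch in text]        # character -> its code number
print(codes)                            # [72, 105, 33]
print(list(text.encode("ascii")))       # the stored bytes, same numbers: [72, 105, 33]
print("".join(chr(c) for c in codes))   # number -> character again: Hi!
```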

“A” is represented by 65 in decimal, which in binary is 1000001, or 01000001 when written out as a full 8-bit byte.

So when you type “A” on your keyboard, somewhere in the microprocessor of that computer there is a group of 8 transistors set like this: off, on, off, off, off, off, off, on. I know you’re beginning to wonder, “just how many transistors are in these processors?!” Well, a modern processor can contain billions of transistors. So, yeah, that’s how you get to play 3D games on your computer seamlessly without lag, or watch high-definition movies without stuttering.
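Here’s a toy Python sketch of that last step, assuming the usual convention that 1 means ‘on’ and 0 means ‘off’. The `switch_states` helper is hypothetical, and real hardware holds these bits in registers and memory cells rather than in a neat list, so treat it as a model of the idea only:

```python
def switch_states(ch: str) -> list[str]:
    """Describe the 8-bit ASCII code of one character as on/off switch states."""
    code = ord(ch)
    if code > 127:
        raise ValueError("not an ASCII character")
    bits = format(code, "08b")          # 65 -> '01000001', padded to 8 bits
    return ["on" if bit == "1" else "off" for bit in bits]

print(switch_states("A"))
# ['off', 'on', 'off', 'off', 'off', 'off', 'off', 'on']
```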

So in essence, the computer doesn’t really ‘understand’ 0 and 1; it only knows ‘on’ and ‘off’. Imagining how boring its life is makes me want to feel sorry for it, LOL.

Very Important Note! Please, guys, this is my very first blog post here and I'd really appreciate it if you'd give me feedback on how understandable my writing style is. It's very important, as it'll help me learn how to better explain complex computer science concepts. You can contact me on Twitter at the handle @mr_dondaniel.